Pandas

Reading data from into Pandas

The Python package pandas is widely-used to read and manipulate data in tabular or similar form. One of the most common tabular data formats is CSV.

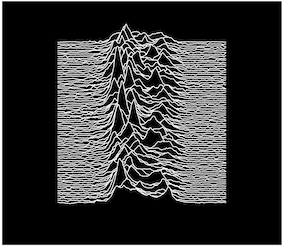

An interesting CSV-format dataset is that containing the successive pulses of the oscillation signal coming from the Pulsar PSR B1919+21 discovered by Jocelyn Bell in 1967. Some of you might also recognise it from a famous album cover

By inspection we can see the data are 80 lines of 300 columns of data. The data format is simple, with no missing values or metadata. We can straightforwardly use the pandas function pd.read_csv, specifying the URL, to read this dataset (specifying only header=None so that the first line is not interpreted as data column names).

import pandas as pd

from urlpath import URL

site = 'https://raw.githubusercontent.com'

site_dir = 'igorol/unknown_pleasures_plot/master'

site_file = 'pulsar.csv'

url = URL(site,site_dir,site_file)

df=pd.read_csv(url.as_posix(),header=None)

df

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 290 | 291 | 292 | 293 | 294 | 295 | 296 | 297 | 298 | 299 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -0.81 | -0.91 | -1.09 | -1.00 | -0.59 | -0.82 | -0.43 | -0.68 | -0.71 | -0.27 | ... | -0.08 | 0.19 | -0.19 | -0.18 | -0.20 | -0.26 | -0.52 | -0.44 | -0.58 | -0.54 |

| 1 | -0.61 | -0.40 | -0.42 | -0.38 | -0.55 | -0.51 | -0.71 | -0.79 | -0.52 | -0.40 | ... | -0.34 | -0.58 | -0.26 | -0.64 | -1.05 | -0.83 | -0.80 | -0.47 | -0.13 | -0.12 |

| 2 | -1.43 | -1.15 | -1.25 | -1.13 | -0.76 | -0.25 | 0.40 | 0.26 | 0.30 | 0.36 | ... | -0.29 | 0.16 | 0.83 | 0.99 | 1.28 | 0.11 | -0.77 | -0.88 | -0.45 | -1.01 |

| 3 | -1.09 | -0.85 | -0.72 | -0.74 | -0.26 | -0.04 | -0.19 | 0.18 | 0.03 | 0.19 | ... | 0.48 | 0.52 | -0.14 | -1.13 | -1.07 | -1.03 | -0.78 | -0.40 | 0.18 | 0.27 |

| 4 | -1.13 | -0.98 | -0.93 | -0.90 | -1.14 | -1.00 | -0.90 | -1.18 | -1.30 | -1.07 | ... | -0.27 | -0.47 | -0.49 | -0.23 | -0.75 | -0.29 | -0.54 | -0.65 | -0.64 | -0.94 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 75 | 0.62 | 0.64 | 0.59 | 0.30 | 0.01 | 0.05 | -0.63 | 0.07 | 0.36 | 0.78 | ... | 0.20 | 0.22 | 0.23 | 0.27 | -0.10 | -0.21 | -0.09 | -0.24 | -0.17 | -0.62 |

| 76 | 0.32 | 0.31 | 0.28 | 0.42 | -0.24 | -0.48 | -0.73 | -0.64 | 0.04 | 0.02 | ... | -0.44 | -0.53 | -0.50 | -0.49 | -0.63 | -0.56 | -0.50 | -0.38 | -0.58 | -0.43 |

| 77 | -0.09 | -0.14 | -0.24 | -0.24 | -0.66 | 0.00 | 0.29 | 0.29 | 0.60 | 0.86 | ... | 0.08 | -0.88 | -1.17 | -0.36 | -0.31 | -0.12 | 0.29 | -0.02 | 0.21 | 0.44 |

| 78 | 0.11 | 0.05 | 0.05 | -0.05 | -0.03 | -0.29 | -0.08 | -0.54 | -0.01 | 0.01 | ... | -0.73 | -0.54 | -0.53 | -0.92 | -0.68 | -0.87 | -1.31 | -1.02 | -1.10 | -1.62 |

| 79 | 0.12 | -0.12 | -0.12 | -0.45 | -0.24 | -0.48 | -0.57 | -0.19 | -0.07 | -0.59 | ... | 0.12 | 0.03 | -0.28 | 0.02 | -0.01 | 0.13 | 0.09 | -0.01 | -0.03 | -0.23 |

80 rows × 300 columns

pandas transpose

In this format, we have time as columns and sample number in the rows. In many cases, we may wish to view the dataset 'the other way around', i.e. with rows as time and columns as sample number. This is achieved with the transpose operation:

import pandas as pd

from urlpath import URL

site = 'https://raw.githubusercontent.com'

site_dir = 'igorol/unknown_pleasures_plot/master'

site_file = 'pulsar.csv'

url = URL(site,site_dir,site_file)

# transpose the dataset

df=pd.read_csv(url.as_posix(),header=None).transpose()

df

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 70 | 71 | 72 | 73 | 74 | 75 | 76 | 77 | 78 | 79 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -0.81 | -0.61 | -1.43 | -1.09 | -1.13 | -0.66 | -0.36 | -0.73 | -0.89 | -0.69 | ... | 0.00 | -0.16 | 0.19 | -0.32 | -0.16 | 0.62 | 0.32 | -0.09 | 0.11 | 0.12 |

| 1 | -0.91 | -0.40 | -1.15 | -0.85 | -0.98 | -0.89 | -0.21 | -0.83 | -0.61 | -0.54 | ... | -0.12 | -0.15 | 0.06 | -0.83 | -0.26 | 0.64 | 0.31 | -0.14 | 0.05 | -0.12 |

| 2 | -1.09 | -0.42 | -1.25 | -0.72 | -0.93 | -0.87 | -0.44 | -0.91 | -0.74 | -0.84 | ... | 0.10 | 0.25 | -0.27 | -0.69 | -0.36 | 0.59 | 0.28 | -0.24 | 0.05 | -0.12 |

| 3 | -1.00 | -0.38 | -1.13 | -0.74 | -0.90 | -0.87 | -0.20 | -1.10 | -0.85 | -0.89 | ... | -0.01 | 0.37 | -0.11 | -0.80 | -0.49 | 0.30 | 0.42 | -0.24 | -0.05 | -0.45 |

| 4 | -0.59 | -0.55 | -0.76 | -0.26 | -1.14 | -1.07 | -0.31 | -0.87 | -0.77 | -0.45 | ... | -0.15 | -0.13 | 0.09 | -0.76 | 0.00 | 0.01 | -0.24 | -0.66 | -0.03 | -0.24 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 295 | -0.26 | -0.83 | 0.11 | -1.03 | -0.29 | -0.55 | -1.45 | -1.20 | -0.94 | -0.16 | ... | 0.47 | 0.10 | -0.06 | 0.08 | 0.28 | -0.21 | -0.56 | -0.12 | -0.87 | 0.13 |

| 296 | -0.52 | -0.80 | -0.77 | -0.78 | -0.54 | -0.62 | -0.77 | -1.40 | -1.05 | 0.24 | ... | 0.41 | 0.02 | -0.08 | -0.15 | -0.01 | -0.09 | -0.50 | 0.29 | -1.31 | 0.09 |

| 297 | -0.44 | -0.47 | -0.88 | -0.40 | -0.65 | -0.71 | 0.03 | -0.51 | -0.51 | -0.17 | ... | 0.32 | -0.10 | -0.04 | 0.03 | -0.67 | -0.24 | -0.38 | -0.02 | -1.02 | -0.01 |

| 298 | -0.58 | -0.13 | -0.45 | 0.18 | -0.64 | -0.88 | 0.47 | 0.25 | -0.47 | -0.09 | ... | 0.57 | -0.16 | 0.23 | 0.03 | -0.86 | -0.17 | -0.58 | 0.21 | -1.10 | -0.03 |

| 299 | -0.54 | -0.12 | -1.01 | 0.27 | -0.94 | -0.70 | 1.33 | 0.74 | -0.79 | 0.01 | ... | 0.48 | -0.06 | -0.10 | -0.54 | -1.66 | -0.62 | -0.43 | 0.44 | -1.62 | -0.23 |

300 rows × 80 columns

We will use this transposed dataset in future exercises. so make sure you remember how to do this operation.

pandas format and read_table

Not all data files we find on the web may be so straightforward to read though (Hint: one of the files you will use in Part A of your assessed practical is like this!). In 020_Python_files we saw data of Monthly Southeast England precipitation (mm) in a tabular form on the Met Office website. We would commonly use the Python package pandas to read and analyse such data.

But the data format is actually quite complex and we can't specify complex formats for reading directly from a URL in pandas.

Instead, in such cases, we need to download the file first, in much the same way we did for MODIS data earlier (but a text file this time, and no password needed).

from urlpath import URL

from pathlib import Path

# NB -- avoid trailing / on these

# i.e. dont put

# site_dir = 'hadobs/hadukp/data/monthly/'

# or it wont work!

site = 'https://www.metoffice.gov.uk/'

site_dir = 'hadobs/hadukp/data/monthly'

site_file = 'HadSEEP_monthly_totals.txt'

url = URL(site,site_dir,site_file)

r = url.get()

if r.status_code == 200:

# setup Path object for output file

filename = Path('work',url.name)

# write text data

filename.write_text(r.text)

# check size and report

print(f'file {filename} written: {filename.stat().st_size} bytes')

else:

print(f'failed to get {url}')

file work/HadSEEP_monthly_totals.txt written: 15209 bytes

Now we want to read this file work/HadSEEP_monthly_totals.txt into pandas.

By inspection, the dataset is seen to have a variable number of spaces between the data columns. This is known as 'whitespace' (i.e. or \t characters). This makes it more complex to read into pandas than a CSV format, and we need to specify a regular expression meaning 'one or more space'. This is r"[ ]{1,}" and we give the keyword sep for pandas as sep=r"[ ]{1,}". Further for pandas in this case we must specify that we should use the Python engine to interpret engine='python'. Other features of the dataset are that the first 3 rows of data are metadata and should be skipped in reading the dataset: skiprows=3, with the 4th line the data column headers. Finally, we see that 'no data' values are given here as the value -99.9: na_values=[-99.9].

Since there are quite a few keyword options to use, we might find it convenient to gather these into a dictionary:

import pandas as pd

panda_format = {

'skiprows' : 3,

'na_values' : [-99.9],

'sep' : r"[ ]{1,}",

'engine' : 'python'

}

filename = Path('work','HadSEEP_monthly_totals.txt')

df=pd.read_table(filename,**panda_format)

df.head()

| Year | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | Annual | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1873 | 87.1 | 50.4 | 52.9 | 19.9 | 41.1 | 63.6 | 53.2 | 56.4 | 62.0 | 86.0 | 59.4 | 15.7 | 647.7 |

| 1 | 1874 | 46.8 | 44.9 | 15.8 | 48.4 | 24.1 | 49.9 | 28.3 | 43.6 | 79.4 | 96.1 | 63.9 | 52.3 | 593.5 |

| 2 | 1875 | 96.9 | 39.7 | 22.9 | 37.0 | 39.1 | 76.1 | 125.1 | 40.8 | 54.7 | 137.7 | 106.4 | 27.1 | 803.5 |

| 3 | 1876 | 31.8 | 71.9 | 79.5 | 63.6 | 16.5 | 37.2 | 22.3 | 66.3 | 118.2 | 34.1 | 89.0 | 162.9 | 793.3 |

| 4 | 1877 | 146.0 | 47.7 | 56.2 | 66.4 | 62.3 | 24.9 | 78.5 | 82.4 | 38.4 | 58.1 | 144.5 | 54.2 | 859.6 |

Exercise 1

The file 2276931.csv contains precipitation data for an NOAA weather station HAVANA 4.2 SW, FL US for the year 2020 to date.

The dataset URL is:

https://raw.githubusercontent.com/UCL-EO/geog0111/master/notebooks/data/2276931.csv

- Inspect the file to discover any issues you must account for.

- Download the file and read into

pandas - print the first 5 lines of data

Selecting data in pandas, and datetime

Whilst it is a good start to be able to load a dataset into a dataFrame using pandas, we need to be able to select data from this.

import pandas as pd

from urlpath import URL

from pathlib import Path

site = 'https://www.metoffice.gov.uk/'

site_dir = 'hadobs/hadukp/data/monthly'

site_file = 'HadSEEP_monthly_totals.txt'

url = URL(site,site_dir,site_file)

r = url.get()

if r.status_code == 200:

# setup Path object for output file

filename = Path('work',url.name)

# write text data

filename.write_text(r.text)

# check size and report

print(f'file {filename} written: {filename.stat().st_size} bytes')

else:

print(f'failed to get {url}')

panda_format = {

'skiprows' : 3,

'na_values' : [-99.9],

'sep' : r"[ ]{1,}",

'engine' : 'python'

}

df_had=pd.read_table(filename,**panda_format)

# df.head: first n lines

df_had.head()

file work/HadSEEP_monthly_totals.txt written: 15209 bytes

| Year | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | Annual | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1873 | 87.1 | 50.4 | 52.9 | 19.9 | 41.1 | 63.6 | 53.2 | 56.4 | 62.0 | 86.0 | 59.4 | 15.7 | 647.7 |

| 1 | 1874 | 46.8 | 44.9 | 15.8 | 48.4 | 24.1 | 49.9 | 28.3 | 43.6 | 79.4 | 96.1 | 63.9 | 52.3 | 593.5 |

| 2 | 1875 | 96.9 | 39.7 | 22.9 | 37.0 | 39.1 | 76.1 | 125.1 | 40.8 | 54.7 | 137.7 | 106.4 | 27.1 | 803.5 |

| 3 | 1876 | 31.8 | 71.9 | 79.5 | 63.6 | 16.5 | 37.2 | 22.3 | 66.3 | 118.2 | 34.1 | 89.0 | 162.9 | 793.3 |

| 4 | 1877 | 146.0 | 47.7 | 56.2 | 66.4 | 62.3 | 24.9 | 78.5 | 82.4 | 38.4 | 58.1 | 144.5 | 54.2 | 859.6 |

This dataset has column titles Year Jan Feb Mar Apr ... Annual. We can get the list of column titles as df_had.columns:

print(df_had.columns)

Index(['Year', 'Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun', 'Jul', 'Aug', 'Sep',

'Oct', 'Nov', 'Dec', 'Annual'],

dtype='object')

Sometimes it is useful to convert this to a list, for list selection in this example:

cols = list(df_had.columns)

for c in cols[1:-1]:

print(c)

Jan

Feb

Mar

Apr

May

Jun

Jul

Aug

Sep

Oct

Nov

Dec

To select a column, we can use any of these column names as a key, in the same way as in using a dictionary:

df_had['Jan']

0 87.1

1 46.8

2 96.9

3 31.8

4 146.0

...

145 80.9

146 34.0

147 66.9

148 115.6

149 24.3

Name: Jan, Length: 150, dtype: float64

Or multiple columns, for example only the month datasets here:

months = list(df_had.columns)[:-1]

df_had_m = df_had[months]

df_had_m.head()

| Year | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1873 | 87.1 | 50.4 | 52.9 | 19.9 | 41.1 | 63.6 | 53.2 | 56.4 | 62.0 | 86.0 | 59.4 | 15.7 |

| 1 | 1874 | 46.8 | 44.9 | 15.8 | 48.4 | 24.1 | 49.9 | 28.3 | 43.6 | 79.4 | 96.1 | 63.9 | 52.3 |

| 2 | 1875 | 96.9 | 39.7 | 22.9 | 37.0 | 39.1 | 76.1 | 125.1 | 40.8 | 54.7 | 137.7 | 106.4 | 27.1 |

| 3 | 1876 | 31.8 | 71.9 | 79.5 | 63.6 | 16.5 | 37.2 | 22.3 | 66.3 | 118.2 | 34.1 | 89.0 | 162.9 |

| 4 | 1877 | 146.0 | 47.7 | 56.2 | 66.4 | 62.3 | 24.9 | 78.5 | 82.4 | 38.4 | 58.1 | 144.5 | 54.2 |

To select data rows, we can set some condition as a mask.

df_had_m[df_had_m['Year'] > 2000].head()

| Year | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 128 | 2001 | 93.1 | 92.9 | 122.4 | 75.7 | 32.2 | 25.8 | 51.5 | 83.4 | 70.1 | 133.0 | 43.0 | 25.2 |

| 129 | 2002 | 69.4 | 79.1 | 44.6 | 42.9 | 78.4 | 58.1 | 69.5 | 43.2 | 37.5 | 88.4 | 175.4 | 137.3 |

| 130 | 2003 | 80.1 | 27.6 | 25.6 | 35.6 | 45.3 | 49.5 | 46.4 | 16.2 | 11.0 | 52.8 | 142.6 | 74.3 |

| 131 | 2004 | 86.3 | 29.7 | 40.5 | 81.8 | 48.7 | 31.1 | 52.0 | 101.8 | 26.7 | 121.0 | 33.4 | 55.9 |

| 132 | 2005 | 34.4 | 21.0 | 48.8 | 46.5 | 28.2 | 35.7 | 57.3 | 49.9 | 48.5 | 91.5 | 50.0 | 64.9 |

The selection of years was straightforward in that example, but sometimes the date can be encoded differently.

Let's generate a test example to see this where we encode the date as

# generate date strings

dates = [f'2000-{m:>02d}-01' for m in range(1,13)]

# put in DataFrame

df = pd.DataFrame(dates,columns=["YY-MM-DD"])

# add a column of some values

values = [m*m for m in range(1,13)]

df["VALUES"] = values

df

| YY-MM-DD | VALUES | |

|---|---|---|

| 0 | 2000-01-01 | 1 |

| 1 | 2000-02-01 | 4 |

| 2 | 2000-03-01 | 9 |

| 3 | 2000-04-01 | 16 |

| 4 | 2000-05-01 | 25 |

| 5 | 2000-06-01 | 36 |

| 6 | 2000-07-01 | 49 |

| 7 | 2000-08-01 | 64 |

| 8 | 2000-09-01 | 81 |

| 9 | 2000-10-01 | 100 |

| 10 | 2000-11-01 | 121 |

| 11 | 2000-12-01 | 144 |

To filter this form of date description, we need to use pd.to_datetime. The easiest way to do this is to create an additional column with the datetime object:

df['DATE'] = pd.to_datetime(df["YY-MM-DD"])

Now we can access datetime fields such asdf['DATE'].dt.year,df['DATE'].dt.month from this, and use these to select rows of data. We combine multiple selection criteria with logical operators and : &, or : | and not : ~:

# print months with index > 4 and <= 7

df[(df['DATE'].dt.month > 4) & (df['DATE'].dt.month <= 7)]

| YY-MM-DD | VALUES | DATE | |

|---|---|---|---|

| 4 | 2000-05-01 | 25 | 2000-05-01 |

| 5 | 2000-06-01 | 36 | 2000-06-01 |

| 6 | 2000-07-01 | 49 | 2000-07-01 |

Hint: Take note of how to filter datetime fields here. You may find you need it for your assessment.

There are many more functions and approaches for data manipulation in pandas that you can read up on later. We have covered the main ones here that you will need in this course and to submit the coursework.

Writing a CSV file in pandas

As well as reading a CSV file, it would be useful to know how to write such a file using pandas. All you need to, once the data are in a pandas dataframe, is to call to_csv:

import pandas as pd

x = list(range(100))

# loop to generate y = x*x

y = [xi * xi for xi in x]

# load into pandas

df = pd.DataFrame({'x data':x,'y data':y})

df.head()

| x data | y data | |

|---|---|---|

| 0 | 0 | 0 |

| 1 | 1 | 1 |

| 2 | 2 | 4 |

| 3 | 3 | 9 |

| 4 | 4 | 16 |

from pathlib import Path

# save as csv without the index

df.to_csv(Path('work/dataset.csv'),index=False)

Exercise 2

Read and print the data in the file 'work/dataset.csv

Summary

In this section, we have used Path and URL classes to open streams from files and URLs. We have seen how to use this to read the stream into packages that can interpret various formats of data, such as yaml, json, and CSV and tabular data.

We have seen that using these object-oriented classes to deal with files and URLs means that we can use essentially the same functions throughout.

We have come across the pandas package for reading tabular and similar datasets.

pandas:

| Command | Comment |

|---|---|

pd.read_csv(f) |

Read CSV data from file or URL f |

pd.read_table(f) |

Read table data from file or URL f |

skiprows=N |

Keyword to skip N rows in reading for pd.read_table |

na_values=[-99.9] |

Keyword to set list of values to ignore (-99.9 here) |

sep |

Keyword to define field separator |

engine='python' or engine='C' |

Keyword to set reading engine. python is more flexible, but C is faster. |

df.transpose() |

Transpose (rows->columns, columns->rows) pandas dataframe df |

df.head(N) |

first N lines of data (default 5) |

df.columns |

list-like object of column headings |

df[cname] |

Select column with name cname |

df[[c1,c2,c3]] |

Select columns with names c1, c2 and c3 |

pd.DataFrame(list,columns=cnames) |

Create pandas dataframe from information in list-like structures list with names from list cnames |

pd.to_datetime(str_list) |

convert list of date strings (e.g. of form YYYY-MM-DD) to datetime representation |

df[datetimes].dt.month |

month from datetime field fromn datetime-format column with name datetimes |

df[datetimes].dt.year |

year from datetime field fromn datetime-format column with name datetimes |

df[datetimes].dt.day |

day from datetime field fromn datetime-format column with name datetimes |

df.to_csv(filename,index=False) |

Write dataframe df to CSV format file, with no index column |